The limitations of AI

AI is everywhere, but Nabil Hannan at NetSPI warns that it’s not as smart as you might think

Artificial intelligence is everywhere: in the news, in our products, and even in our conversations about the future. From predicting which film we’ll watch next to helping us write a tricky email, AI feels pervasive and, to some, almost sentient.

But here’s the catch: AI is not intelligent in the way many of us think. Despite all the buzz, it is far from sentient. Rather, it is a sophisticated set of algorithms that process data at lightning speed but are constrained by what they have been trained to do. AI doesn’t understand, learn, or innovate like a human. It simply follows predefined rules and instructions.

We must understand what AI can and can’t do if we are to maintain realistic expectations. This is particularly true in cybersecurity, where over-relying on AI, or using it in the wrong way, could have serious consequences.

Let’s examine why AI isn’t as intelligent as many believe, the dangers of overestimating it, and how understanding its genuine strengths can unlock its true potential.

Understanding AI’s limitations

AI might seem brilliant on the surface, but at its core it is not thinking or understanding. For example, AI can translate text between languages almost instantly, yet it doesn’t truly understand the content or the nuances of those languages. Instead, it manipulates symbols based on patterns it saw during training.

Imagine training an AI system only on English. It could become adept at performing various tasks in that language, but it would be clueless if you switched to another language without training it on that language first. By contrast, a child exposed to both English and French would naturally begin to understand and adapt. AI lacks this innate adaptability; it is confined to what it has been explicitly trained on.

Even some of the most advanced language models fundamentally work by predicting which word should come next, based on patterns in their training data. These models can produce remarkably human-like text, but their frequent struggles with accuracy and critical reasoning reveal a deeper truth: they may convincingly mimic understanding, yet they lack the ability to distinguish truth from fiction that comes naturally to humans.
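To make next-word prediction concrete, here is a deliberately tiny sketch: a bigram counter that picks whichever word most often followed the previous one in its training text. Real language models use large neural networks rather than raw word counts, and the training sentence and the function names `train_bigrams` and `predict_next` are hypothetical, chosen only for illustration. The point is that the output reflects frequency in the training data, not any check on whether the result is true, and that a word never seen in training gets no answer at all.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for each word, which words followed it in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        follows[prev][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = follows.get(word.lower())  # .get() does not create new entries
    if not counts:
        return None  # nothing in the training data to pattern-match against
    return counts.most_common(1)[0][0]

# Hypothetical training text: the model repeats its patterns, true or not.
corpus = "the moon is made of cheese . the moon is bright . the sun is bright"
model = train_bigrams(corpus)

print(predict_next(model, "moon"))  # is
print(predict_next(model, "is"))    # bright (seen twice, versus "made" once)
print(predict_next(model, "of"))    # cheese: fluent, but false
print(predict_next(model, "lune"))  # None: a French word it never saw
```

The model happily completes "made of" with "cheese" because that is what its data contained, and it has nothing to say about "lune" because it was only ever trained on English.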

The risks of overestimating AI’s abilities

A common misconception is that AI can solve complex, unfamiliar problems just as well as familiar ones. AI performs exceptionally well in situations similar to those it was trained on. In cybersecurity, for example, AI can quickly identify known threats: it is trained on extensive databases of viruses and malicious patterns, so it can efficiently scan for those signatures and take action.

However, when it comes to novel threats, AI can struggle. Unlike a human analyst, who can apply general knowledge and intuition, AI is blindsided by the unfamiliar. If a new kind of threat arises, AI might fail to recognise it, potentially allowing the threat to pass unnoticed. The danger here is over-reliance: if cybersecurity teams become complacent and stop checking what their tools miss, they can leave severe vulnerabilities open. AI is a powerful tool, but it is not infallible, and human oversight is still crucial to fill the gaps.
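The known-versus-novel gap can be illustrated with the simplest form of signature scanning, assuming each threat is identified by a SHA-256 hash of its bytes. This is a minimal sketch of the "known signatures" idea, not an AI model or any vendor's method; the sample payloads, the `KNOWN_BAD` set and the `scan` function are hypothetical.

```python
import hashlib

def signature(payload: bytes) -> str:
    """Fingerprint a payload with SHA-256, a simple stand-in for a threat signature."""
    return hashlib.sha256(payload).hexdigest()

# Hypothetical database of signatures for known-malicious samples.
KNOWN_BAD = {
    signature(b"malicious-dropper-v1"),
    signature(b"credential-stealer-v3"),
}

def scan(payload: bytes) -> str:
    """Block payloads whose signature is already known; everything else passes."""
    return "blocked" if signature(payload) in KNOWN_BAD else "allowed"

print(scan(b"malicious-dropper-v1"))  # blocked: exact match with a known sample
print(scan(b"malicious-dropper-v2"))  # allowed: a new variant has an unseen signature
print(scan(b"quarterly-report"))      # allowed: benign file
```

The second call is the gap described above: the scanner has no notion that "v2" resembles "v1", so a one-character change is enough to slip through. Real products add fuzzy matching and behavioural heuristics to narrow this gap, which is one reason human review of what automated tools let through still matters.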

The idea that AI can seamlessly replace human judgment is misleading. AI models often absorb biases from the data they are trained on, which can result in flawed outcomes. Earlier this year, concerns were raised in the UK about biased AI tools being used in the public sector. These systems were found to risk reinforcing existing inequalities, with evidence that they struggled to make fair and accurate decisions, particularly for diverse populations.

This underlines that AI outputs are only as fair as the data used to train them. Relying on AI without human oversight risks creating biased systems, with harmful consequences in areas such as hiring, law enforcement, and healthcare.

Playing to AI’s strengths

So, if AI is far from perfect, how can we best use it? The key is to understand what AI does well. AI excels at automating repetitive tasks, crunching data, and finding patterns that humans would struggle to notice. These capabilities can be transformational when applied correctly.

In cybersecurity, AI tools can rapidly identify known threats by analysing vast amounts of data and spotting patterns that signal malware or other cyber-threats. For example, AI-driven systems can detect phishing attempts or recognise malicious code faster than human analysts, reducing the window of vulnerability.

Realistic expectations about AI’s capabilities allow companies to integrate it into their processes more effectively. Instead of seeing AI as a solution to every problem, companies should view it as an augmentation tool that frees people from repetitive work so they can focus on higher-value tasks. This perspective makes AI more valuable, as it supports and enhances human effort rather than attempting to replace it outright.

AI needs a rebrand

While AI has been riding a wave of hype for years, it’s clear that it is not an intelligent being. However, by understanding its limitations, businesses and individuals can better utilise AI for what it truly is: a powerful data-processing and task-automation machine.

We need to shift our thinking from "AI as a brain" to "AI as a helpful assistant." This change in perspective helps us avoid over-reliance and make the most of what AI has to offer: speed, precision, and the capacity to support, rather than replace, human ingenuity.

AI is extremely efficient at processing data, yet it lacks true understanding and the ability to learn in a human-like way. This distinction is crucial because overestimating AI’s intelligence, particularly in critical areas like cybersecurity, can lead to significant risks and gaps in judgment.

Ultimately, AI functions best when it works in tandem with human expertise, acting as an assistant that enhances rather than supplants human intelligence. It’s not a magical solution, nor a substitute for human thought.

By acknowledging both its strengths and limitations, we can unlock AI’s full potential and avoid the pitfalls of unrealistic expectations.

Nabil Hannan is Field CISO at NetSPI

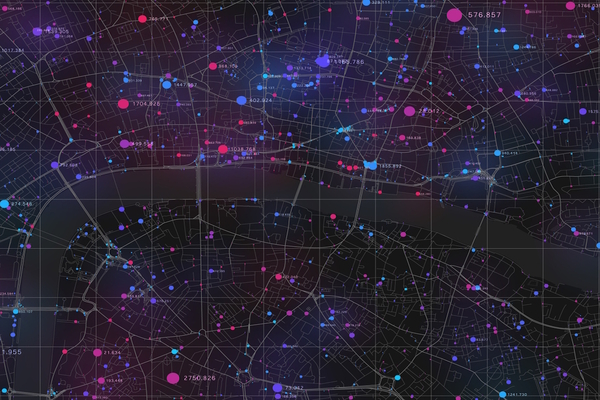

Main image courtesy of iStockPhoto.com and Andrey Suslov


© 2024, Lyonsdown Limited. Business Reporter® is a registered trademark of Lyonsdown Ltd. VAT registration number: 830519543