The impact of generative AI on online fraud

Chris Downie at Pasabi explains the potential of generative AI to help criminals conduct online frauds and outlines the steps that organisations can take to protect their customers

The explosion of generative AI tools such as ChatGPT and Midjourney has grabbed the world’s attention. People are experimenting with them to see how good they are and businesses are exploring how they can be used to increase productivity and enhance the user experience.

Despite being in their relative infancy, these models are already impressing users with their capabilities and - somewhat alarmingly - are being adopted into the fabric of businesses at an unprecedented rate.

Whether it’s to augment marketing creativity or improve customer service chatbots, generative AI tools are being used across a variety of industries. McKinsey recently estimated that generative AI could add the equivalent of $2.6 trillion to $4.4 trillion annually to the global economy.

In the first five days after ChatGPT’s release, over one million users logged on to the platform. In just four months, total monthly website visits went from 266 million in December 2022 to 1.8 billion in April 2023!

Dark side of AI

While many of us are excited by generative AI tools’ potential applications, it should come as no surprise that fraudsters are finding ways to exploit them. Just as generative AI is creating new ways to build honest businesses, scammers are learning what they can do with this technology too.

Fraudsters are weaponising these tools to be more productive and increase the scale and speed at which they operate. From creating deepfakes on dating sites to writing fake reviews, generative AI has made scammers’ lives a lot easier and online fraud harder to detect.

Minimum effort, maximum reward

One of the challenges with having these tools in fraudsters’ hands is that with minimal effort, they can perpetrate their scams at a higher velocity than ever before.

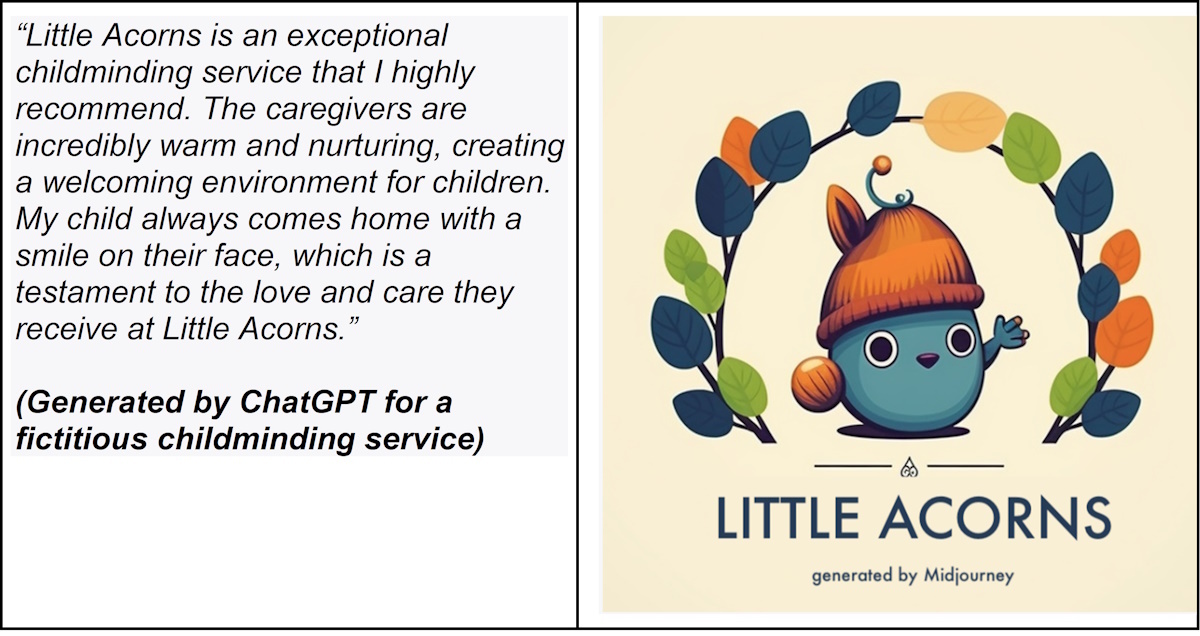

To illustrate, anyone can quickly invent a fictitious childcare business called ‘Little Acorns’, generate a logo, and instruct a chatbot to write website copy and 50 positive reviews for it. Within minutes, this new business has credible-sounding fake reviews.

The issue with this, of course, is that parents evaluating childcare options will be unaware that the reviews are fabricated. Their decision could then be influenced by fake information, and they could leave their children with unvetted sitters in unsafe settings, with potentially dangerous outcomes. The same applies to choosing healthcare providers, pet services, holiday accommodation… the list goes on and on.

Keeping consumers safe from online fraud

Thankfully, there are measures businesses can take to help protect consumers from a surge in online fraud:

Fight AI with AI. As fraudsters use increasingly sophisticated AI tools to run their scams, businesses must use AI too in order to protect their customers. Manually reviewing data for signs of fraud is impractical - fortunately AI fraud detection tools can help. They are designed to process large amounts of data efficiently, making potential threats easier to detect.

Focus on behaviours, not output. However, AI fraud detection tools alone can’t do the job. Because generative AI tools can produce images, videos, written content and music from a text prompt, authenticity is much harder to determine when focusing on outputs alone. Take, for example, the German artist who won a category at the 2023 Sony World Photography Awards. He refused the award, revealing his submission was actually generated by AI.

Users’ behaviour provides useful insights. In the case of fake reviews, for example, it’s more helpful to consider where the review came from, when it was posted and who it benefits than to analyse the review content alone.

Consider someone reviewing a physiotherapy clinic in Aberdeen and a coffee shop in Aberystwyth on the same day, from an IP address geographically distant from both locations. This is highly suspicious and could be a sign of a fabricated review. It is not something that would have been apparent from the review content alone.
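The Aberdeen and Aberystwyth scenario can be expressed as a simple behavioural rule. The Python sketch below is purely illustrative and is not a description of any particular fraud detection product: the `Review` record, the `suspicious_pairs` function, the 200 km threshold, the approximate coordinates and the choice of London as the IP location are all assumptions made for the example. It also assumes an IP address has already been resolved to a rough location, which in practice is imprecise.

```python
from dataclasses import dataclass
from datetime import date
from itertools import combinations
from math import asin, cos, radians, sin, sqrt

# Approximate (latitude, longitude) pairs in degrees, for illustration only.
ABERDEEN = (57.15, -2.09)
ABERYSTWYTH = (52.42, -4.08)
LONDON = (51.51, -0.13)


@dataclass(frozen=True)
class Review:
    """Hypothetical record of the behavioural metadata around one review."""
    reviewer_id: str
    business: str
    business_location: tuple[float, float]
    ip_location: tuple[float, float]  # assumed output of an IP geolocation lookup
    posted_on: date


def haversine_km(a, b):
    """Great-circle distance in kilometres between two (lat, lon) points."""
    lat1, lon1, lat2, lon2 = map(radians, (*a, *b))
    h = (sin((lat2 - lat1) / 2) ** 2
         + cos(lat1) * cos(lat2) * sin((lon2 - lon1) / 2) ** 2)
    return 2 * 6371.0 * asin(sqrt(h))


def suspicious_pairs(reviews, min_km=200.0):
    """Return pairs of businesses reviewed by one reviewer on the same day
    where the businesses are far apart and the IP is far from both."""
    flagged = []
    for first, second in combinations(reviews, 2):
        if (first.reviewer_id != second.reviewer_id
                or first.posted_on != second.posted_on):
            continue
        businesses_far_apart = haversine_km(
            first.business_location, second.business_location) >= min_km
        ip_far_from_both = (
            haversine_km(first.ip_location, first.business_location) >= min_km
            and haversine_km(second.ip_location, second.business_location) >= min_km
        )
        if businesses_far_apart and ip_far_from_both:
            flagged.append((first.business, second.business))
    return flagged


sample_reviews = [
    Review("user-1", "Physiotherapy clinic", ABERDEEN, LONDON, date(2023, 6, 1)),
    Review("user-1", "Coffee shop", ABERYSTWYTH, LONDON, date(2023, 6, 1)),
]
print(suspicious_pairs(sample_reviews))
```

A rule like this only raises a flag for further investigation. A genuine reviewer could be travelling or using a VPN, so a signal of this kind would normally be weighed alongside others rather than treated as proof of fraud.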

Collaborate, innovate, educate. Key to tackling the growing challenge of online fraud is for AI researchers, fraud experts and law enforcement agencies to work together to share intelligence to identify emerging threats and trends.

Collaborative research projects can help build an understanding of specific fraud challenges and bridge the gap between academia, industry and law enforcement. This helps inform the development of sophisticated fraud detection tools to enable all parties to keep one step ahead. Schemes such as Call 159 are designed to educate consumers about what to do if they suspect they’ve been targeted by scams.

A future powered by generative AI

Generative AI tools promise greater advancements. No one can deny that AI tools currently perform impressively and they are only going to get better; it’s hard to predict where we’ll be in just 6 months’ time!

What is certain, however, is that bad actors will continue to exploit them in ways we’re not even aware of yet. AI researchers, enforcement agencies and fraud experts need to combine their expertise and resources to create a safer digital environment for individuals and businesses alike.

Fraud prevention tools spot the signals. While the quality of outputs from generative AI tools will likely improve, the behaviours of humans using them will be slower to change. Fraudsters will continue to unwittingly leave behind digital cues that fraud prevention tools can track to detect and stop them.

Balance fraud prevention with privacy protection. Striking the right balance between fraud prevention and privacy protection requires careful consideration of ethical principles and legal frameworks, alongside ongoing monitoring and evaluation of AI systems to ensure they align with societal values.

Preserve authenticity to reinforce trust. Authenticity matters when it comes to reviews. Without it they are worthless, and in the case of healthcare, childcare and pet services, potentially very dangerous.

As society and governments debate what guardrails or regulations are needed, businesses must focus on using proven solutions that combine AI and behavioural signals, to keep their platforms free from harmful influence and as safe as possible for their users.

Chris Downie is CEO of Pasabi

Main image courtesy of iStockPhoto.com

Business Reporter Team


© 2024, Lyonsdown Limited. Business Reporter® is a registered trademark of Lyonsdown Ltd. VAT registration number: 830519543